Discord Safety News Hub

Crisis Text Line Partnership

Mental wellness is important for everyone. Discord is partnering with Crisis Text Line, a nonprofit that provides 24/7 text-based mental health support and crisis intervention via trained volunteer Crisis Counselors.

If you report a message for self-harm within the Discord mobile app, you will be presented with information on how to connect with a Crisis Text Line volunteer Crisis Counselor. You can also text ‘DISCORD’ to 741741 to reach Crisis Text Line counselors available 24/7 to help you or a friend through any mental health crisis. Our goal is to enable users to get the help they need for themselves, or empower others to get help, as quickly as possible.

Crisis Text Line is available to those in the United States and is offered in both English and Spanish.

Supporting Youth Digital Wellness

We’re constantly improving and expanding our approach to teen safety and wellbeing on Discord. That’s why we’re proud to support the Digital Wellness Lab at Boston Children’s Hospital to help ground our approach to teen safety and belonging in the latest scientific research and best practices. The Digital Wellness Lab at Boston Children’s Hospital convenes leaders in technology, scientific research, healthcare, child development, media, entertainment, and education to deepen understanding and address the future of how young people can engage healthily with media and technology. We’re excited to work with the Digital Wellness Lab to help develop ways to better support teen mental health, both online and off.

Empowering Teens and Families to Build Belonging

Back-to-school season is a great time for families to rethink and reset their relationship with technology. To help out, Discord is rolling out a new program with National PTA to help families and educators discuss ways to foster positive relationships and build belonging in our digital world. Through the program, free resources will be provided, along with breakout activities and digital safety tips for families. As part of National PTA’s PTA Connected program, Discord will also fund 30 grants for local high school PTAs to pilot the program during the 2022–2023 school year.

Parents, want some help starting a conversation about digital safety with your family or at your school? Check out National PTA’s PTA Connected page for everything you need.

Expanding Our Policies

Beyond our work with Crisis Text Line, Digital Wellness Lab, and National PTA, we continue to build out efforts and resources that help people on Discord build belonging and support one another to overcome the impossible. Finding a community that is navigating similar challenges can be incredibly helpful for healing.

That said, platforms have a critical role to play to ensure these digital spaces don’t attempt to normalize or promote hurtful behaviors or encourage other users to engage in acts of self-harm. We recently expanded our Self Harm Encouragement and Promotion Policy to ensure this type of content is addressed on Discord. We do not allow any content or behavior that promotes or encourages individuals to commit acts of self-harm on Discord. We also prohibit content that seeks to normalize self-harming behaviors, as well as content that discourages individuals from seeking help for self-harm behaviors.

We’re committed to mental wellbeing and helping our users uplift each other and their communities. Our newly implemented partnerships and resources developed with the support of the Crisis Text Line, Digital Wellness Lab, and National PTA are an important addition to our ongoing work to give everyone the power to create space to find belonging in their lives.

Choose what you see and who you want to hang with

Introducing our new Teen Safety Assist initiative: Teen Safety Assist is focused on further protecting teens by introducing a new series of features, including multiple safety alerts and sensitive content filters that will be default enabled for teen users.

Starting next week, we’re excited to release the first two features of the Teen Safety Assist initiative. Teens have shared that they like tools that help them avoid unwanted direct messages (DMs) and media. To support this, we’re rolling out safety alerts on senders and sensitive content filters that automatically blur media that may be sensitive — even if the sender doesn’t initially blur it with a spoiler tag.

- Safety alerts on senders: When a teen receives a DM from a user for the first time, Discord will detect if a safety alert should be sent to the teen for this DM. The safety alert will encourage them to double check if they want to reply, and will provide links to block the user or view more safety tips to safeguard themselves.

- Sensitive content filters: For teens, Discord will automatically blur media that may be sensitive in direct messages and group direct messages with friends, as well as in servers. The blur creates an extra step to encourage teens to use caution when viewing the media. In User Settings > Privacy & Safety, teens will be able to change their sensitive media preferences at any time. Anyone can opt into these filters by going to their Privacy & Safety settings page and changing their sensitive media preferences.

We’ve partnered with technology non-profit Thorn to design these features together based on their guidance on teen online safety behaviors and what works best to help protect teens. Our partnership has focused on empowering teens to take control over safety and how best to guide teens to helpful tips and resources.

We’re grateful for the support of Thorn to ensure we build the right protections and empower teens with the tools and resources they need to have a safer online experience.

The first two features of the Teen Safety Assist initiative will start rolling out globally in November 2023 and will be turned on by default for teen users. Stay tuned for many more features from this initiative as we look ahead to the next year.

Work together to keep each other safe

We know mistakes happen and rules are accidentally broken. Our new Warning System includes multiple touchpoints so users can easily understand rule violations and the consequences. These touchpoints provide more transparency into Discord interventions, letting users know how their violation may impact their overall account standing and giving them information to help them do better in the future.

We built this system so users can learn how to do better in the future and help keep all hangouts safe.

- It starts with a DM - Users who break the rules will receive an in-app message directly from Discord letting them know they received either a warning or a violation, based on the severity of what happened and whether or not Discord has taken action.

- Details are one click away - From that message, users will be guided to a detailed modal that, in many cases, will give details of the post that broke our rules, outline actions taken and/or account restrictions, and more info regarding the specific Discord policy or Community Guideline that was violated.

- All info is streamlined in your account standing - In Privacy & Safety settings, all information about past violations can be seen in the new “Account Standing” tab. A user’s account standing is determined based on any active violations and the severity of those violations.

Some violations are more serious than others, and we’ll take appropriate action depending on the severity of the violation. For example, we have and will continue to have a zero-tolerance policy towards violent extremism and content that sexualizes children.

The Warning System is built based on our teen-centric policies. This is a multi-year, focused effort to invest more deeply and holistically into our teen safety efforts. We incorporate teen-centric philosophies in how we enforce our policies, assess risks of new products with Safety by Design, and communicate our policies in an age-appropriate manner.

For the Warning System, this means that we consider how teens are growing, learning, and taking age-appropriate risks as they mature, and we give them more opportunities to learn from mistakes rather than punish them harshly.

The Warning System starts rolling out today in select regions. Stay tuned for many more updates to our Warning System in the future.

For our server moderators and admins, we’ve recently expanded their safety toolkit with new features that allow them to proactively identify potentially unsafe activity, such as server raids and DM spam, and take swift actions as needed.

- Activity Alerts and Security Actions: Activity Alerts notify moderators of a server of abnormal server behavior, particularly what could be massive raids or unusual DM activity. From there, they can investigate further and take action to protect their community as necessary. If it doesn’t seem like an issue, they can easily resolve the alert. Security Actions enable swift server lockdowns by surfacing useful tools quicker, such as temporarily halting new member joins or closing off DMs between non-friend server members.

- Members Page: We’ve reimagined the existing Members page, letting moderators view their members in a more organized way. The Members page now displays relevant safety information about their members like join date, account age, and safety-related flags that we call Signals (such as unusual DM activity or timed out users). We plan on continuing to develop and surface additional relevant Signals. This redesigned Members page appears in a more easily accessible spot above their server’s channel list.
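As a rough illustration of the kind of check an Activity Alert could be built on, here is a minimal Python sketch of a sliding-window join counter. The class name `JoinRateMonitor`, the 60-second window, and the threshold of 10 joins are all assumptions made for this example; they do not describe Discord's actual detection logic.

```python
from collections import deque


class JoinRateMonitor:
    """Flags a possible raid when too many members join within a short window.

    Hypothetical illustration only: the default window and threshold are
    assumed values, not Discord's real settings.
    """

    def __init__(self, window_seconds=60.0, max_joins=10):
        self.window_seconds = window_seconds
        self.max_joins = max_joins
        self._join_times = deque()  # timestamps (in seconds) of recent joins

    def record_join(self, timestamp):
        """Record one join; return True if an alert should be raised."""
        self._join_times.append(timestamp)
        # Drop joins that have fallen out of the sliding window.
        while timestamp - self._join_times[0] > self.window_seconds:
            self._join_times.popleft()
        return len(self._join_times) > self.max_joins
```

A moderator-facing tool could call `record_join` for every new member and surface an alert the first time it returns `True`, leaving the decision to lock down the server or resolve the alert to a human.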

We began rolling these tools out in September to Community Servers, and now we’re rolling them out to all servers.

You choose what stays private

While you might be an open book with your friends, having your personal business out there for everyone to see can feel a bit weird. That’s why you get to decide, from server to server, how much you want to share and how easy it is to contact you.

With Server Profiles, you can adjust how you present yourself in different spaces, allowing you to be as much of the real “you” as you feel comfortable being in any particular group.

You can also always try turning on Message Requests: a feature that sends any DM from someone new into a separate inbox and requires your approval before that user can DM you again. Or, go the extra mile and turn off DMs entirely from server members unless you’ve explicitly added them to your friends list.

We’ve got you

PHEW, okay… that was a lot. Thanks for stickin’ around and jamming through our most recent updates with us. Everything you could ever want to know about safety and privacy on Discord, and even more if you’re the learning type of person, lives in our Safety Library — check it out for more tips on making Discord safe and comfortable for you.

This article was first published on 10/24/2023.

Existing Dangerous and Regulated Goods Policy

Our guidelines state that users may not organize, promote, or engage in the buying, selling, or trading of dangerous and regulated goods. This has always been our policy and will not be changing.

We want to use this opportunity to talk a little bit more about what we consider to be a “dangerous and regulated good” and how this policy will apply:

- We consider a good to be “dangerous” if it has reasonable potential to cause or assist in causing real-world, physical harm to individuals and “regulated” if there are laws in place that restrict the purchase, sale, trade, or ownership of the good. For a good to be covered under this policy, it must be both dangerous and regulated and have the potential to create real-world harm.

- Our definition of “dangerous and regulated goods” includes: real firearms, ammunition, explosives, military-grade tactical gear, controlled substances, marijuana, tobacco, and alcohol. We may expand this list in the future if needed.

Examples and Exceptions

To help put this policy into context, here are some examples of situations we would and would not take action against under this policy:

- Situation: A gaming server has multiple channels for users to connect with each other to talk about and buy, sell, or trade in-game weapons using in-game currencies.

- Analysis: We consider a good to be “dangerous” if it has reasonable potential to cause real-world harm. We would not view guns that are depicted in video games as meeting this criteria, so this server would not be in violation of this policy, and we would take no action against the server.

- Situation: A user posts a message in a server that they have a firearm for sale and includes a link to an off-platform marketplace where they have listed the firearm for sale.

- Analysis: We consider firearms a “dangerous and regulated good” and do not allow any buying, selling, or trading of these goods on Discord. We would take action against the user under this policy and remove the link to the listing.

- Situation: A server has a dedicated channel for users to buy and sell drug paraphernalia, such as smoking pipes and bongs, with one another.

- Analysis: As long as users are not also using the server to buy, sell, or trade controlled substances with one another or using these sales as a means to distribute dangerous and regulated goods, we would not consider this server to be in violation of this policy and would not take action against the server.

Enforcing The Existing Policy

We may take any number of enforcement actions in response to users and servers that are attempting to buy, sell, or trade dangerous and regulated goods on Discord — including warning an account, server, or entire moderator team; removing harmful content; and permanently suspending an account or server.

Looking Ahead

After receiving feedback from our community, we are evaluating the potential for unintended consequences and negative impacts of the age-restricted requirement. Our existing guidelines on Dangerous and Regulated Goods remain as stated; however, we are assessing how best to support server moderators regarding the age-restricted requirement and to ensure we are not preventing positive online engagement.

We regularly evaluate and assess our policies to ensure Discord remains a safe and welcoming place, and plan to update our Community Guidelines in the coming months. We will provide any important updates on this policy then.

The full list of content and behaviors not allowed on Discord can be found in our Community Guidelines. Our Safety Center and Policy & Safety Blog are also great resources if you would like to read more about our approach to safety and our policies.

Today, Discord filed an amicus brief with the Supreme Court in support of our ongoing efforts to create online communities of shared interests that are safe, welcoming, and inclusive. The cases at issue (Moody v. NetChoice, et al. and NetChoice et al. v. Paxton) challenge the constitutionality of two new laws in Texas and Florida. You may have heard of them: these laws would significantly restrict the ability of online services to remove content that is objectionable to their users and force them to keep a wide range of “lawful but awful” content on their sites. Discord is not a traditional social media service, but whether intended or not, the laws are broad enough to likely impact services like ours.

It’s hard to overstate the potential impact if these laws are allowed to go into effect: they would fundamentally change how services like Discord operate, and with that, the experiences we deliver to you. There’s no doubt that the outcome here is hugely important to us as a company, but that alone is not why we are taking a stand. Ultimately, we filed this brief because important context was missing from the official record: you all and the vibrant communities you’ve built on Discord.

Taking a step back, Discord has invested heavily in creating safe and fun online spaces. Our Community Guidelines set the rules for how we all engage on Discord, and we put a lot of effort into enforcing them: around 15% of the company works on safety, including content moderation. This includes Trust and Safety agents who enforce Discord’s rules, engineers who develop moderation tools for both Discord and its users, and many more dedicated individuals across our company.

We do this work so that you can create those online spaces where you can find and foster genuine connection. But in order to bring this world to life, Discord needs to be able to prevent, detect, and remove harmful speech and conduct. Barring companies like Discord from moderating content is a little like barring garbage collection in a city: every individual block would have to devote its own resources to hauling its own garbage, diverting those limited resources from more productive activities and the individualized curation that makes each neighborhood unique. So, while our rules and content moderation efforts certainly reflect our own values and speech about the kind of services we want to offer, we really do it for you all—we work to take care of the hard stuff so you all can focus on creating the communities you want.

That’s why we decided to take such a strong position in these cases: we want to make sure the Supreme Court understands how these laws would impact your speech and your communities. Fortunately, the First Amendment has a lot to say about this: it protects your association rights, which make it possible for you to come together around your shared interests and see content that is relevant and helpful, not harmful.

Keeping your communities fun and safe by not allowing harmful content is a top priority for us. We wouldn’t be doing what we do without all of you, and we will keep working to make sure you can create the online spaces you want.

Our Policies

You can read an overview of the following policies here.

Teen Self-Endangerment Policy

Teen self-endangerment is a nuanced issue that we do not take lightly. We want our teen users to be able to express themselves freely on Discord while also taking steps to ensure these users don’t engage in risky behaviors that might endanger their safety and well-being.

In order to help our teenage users stay safe, our policies state that users under the age of 18 are not allowed to send or access any sexually explicit content. Even when this kind of content is shared consensually between teens, there is a risk that self-generated sexual media can be saved and shared outside of their private conversations. We want to help our users avoid finding themselves in these situations.

In this context, we also believe that dating online can result in self-endangerment. Under this policy, teen dating servers are prohibited on Discord and we will take action against users who are engaging in this behavior. Additionally, older teens engaging in the grooming of a younger teen will be reviewed and actioned under our Inappropriate Sexual Conduct with Children and Grooming Policy.

Through our work and partnership with a prominent child safety organization, we determined that we will, when possible, warn teens who have engaged in sexually explicit behavior before moving to a full ban. One example is teens sharing explicit content with each other that is not their own content.

Child Sexual Abuse Material (CSAM) and Child Sexualization Policy

Discord has a zero-tolerance policy for child sexual abuse, which does not have a place on our platform — or anywhere in society.

We do not allow CSAM on Discord, including AI-generated photorealistic CSAM. When such imagery is found, the imagery is reported to the National Center for Missing & Exploited Children (NCMEC). This ensures that the sexualization of children in any context is not normalized by bad actors.

Inappropriate Sexual Conduct with Children and Grooming Policy

Discord has a zero-tolerance policy for inappropriate sexual conduct with children and grooming. Grooming is inappropriate sexual contact between adults and teens on the platform, with special attention given to predatory behaviors such as online enticement and the sexual extortion of children, commonly referred to as “sextortion.” When we become aware of these types of incidents, we take appropriate action, including banning the accounts of offending adult users and reporting them to NCMEC, which subsequently works with local law enforcement.

How We Work to Keep Our Younger Users Safe

Our Safety team works hard to find and remove abhorrent, harmful content, and to take action, including banning the users responsible and engaging with the proper authorities.

Discord’s Safety by Design practice includes a risk assessment process during the product development cycle that helps identify and mitigate potential risks to user safety. We recognize that teens have unique vulnerabilities in online settings and this process allows us to better safeguard their experience. Through this process, we think carefully about how product features might disproportionately impact teens and consider whether the product facilitates more teen-to-adult interactions and/or any unintended harm. Our teams identify and strategize ways to mitigate safety risks with internal safety technology solutions during this process and through getting insight and recommendations from external partners.

Discord uses a mix of proactive and reactive tools to remove content that violates our policies, from the use of advanced technology like machine learning models and PhotoDNA image hashing to partnering with community moderators to uphold our policies and providing in-platform reporting mechanisms to surface violations.

Our Terms of Service require people to be over a minimum age (13, unless local legislation mandates an older age) to access our website. We use an age gate that asks users to provide their date of birth upon creating an account. If a user is reported as being under 13, we delete their account. Users can only appeal to restore their account by providing an official ID document to verify their age. Additionally, we require that all age-restricted content is placed in a channel clearly labeled as age-restricted, which triggers an age gate, preventing users under 18 from accessing that content.
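The age checks described above come down to date arithmetic. The following Python sketch shows one way to compute a user's age from a date of birth and compare it against the two thresholds mentioned (13 to register, unless local legislation requires an older age, and 18 for age-restricted channels). The function names and the `minimum_age` parameter are hypothetical and chosen for illustration; this is not a description of Discord's own age gate.

```python
from datetime import date

MINIMUM_AGE = 13         # default minimum age stated in the Terms of Service
AGE_RESTRICTED_AGE = 18  # age required to view age-restricted channels


def age_on(birth_date, today):
    """Return the age in whole years on the given day."""
    had_birthday = (today.month, today.day) >= (birth_date.month, birth_date.day)
    return today.year - birth_date.year - (0 if had_birthday else 1)


def can_register(birth_date, today, minimum_age=MINIMUM_AGE):
    """True if the user meets the minimum age (pass a higher value where local law requires one)."""
    return age_on(birth_date, today) >= minimum_age


def can_view_age_restricted(birth_date, today):
    """True if the user is old enough to pass the age-restricted channel gate."""
    return age_on(birth_date, today) >= AGE_RESTRICTED_AGE
```

Passing `today` explicitly, rather than calling `date.today()` inside the functions, keeps the checks deterministic and easy to test around birthdays.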

The newest launches of safety products are focused on helping to safeguard the teen experience with our safety alerts on senders that educate teens on ways they can control their experience and limit unwanted contact. Additionally, sensitive content filters provide additional controls for teens to blur or block unwanted content. Rather than assigning prescriptive solutions for teens, we aim to empower teens with respect for their agency, privacy, and decision-making power.

Additionally, we are expanding our own Trusted Reporter Network in collaboration with INHOPE for direct communication with expert third parties, including researchers, industry peers, and journalists for intelligence sharing.

Our Technology Solutions

Machine learning has proven to be an essential component of safety solutions at Discord. Since our acquisition of Sentropy, a leader in AI-powered moderation systems, we have continued to balance technology with the judgment and contextual assessment of highly trained employees, along with continuing to maintain our strong stance on user privacy.

Below is an overview of some of our key investments in technology:

Teen Safety Assist: Teen Safety Assist is focused on further protecting teens by introducing a new series of features, including multiple safety alerts and sensitive content filters that will be default-enabled for teen users.

Safety Rules Engine: The Safety Rules Engine allows our teams to evaluate user activities on the platform, including registrations, server joins, and other metadata. We then analyze patterns of problematic behavior to make informed decisions and take uniform actions like user challenges or bans.

AutoMod: AutoMod is a powerful tool for community moderators to automatically filter unwanted messages with certain keywords, block profiles that may be impersonating a server admin or appear harmful to their server, and detect harmful messages using machine learning. This technology empowers community moderators to keep their communities safe without having to spend long hours manually reviewing and removing unwanted content on their servers.

Visual safety platform: Discord has continued to invest in improving image classifiers for content such as sexual, gore, and violent media. With these investments, our machine learning models are being improved to detect such media with higher precision and lower error rates, and to decrease teen exposure to potentially inappropriate and mature content. We proactively scan images uploaded to our platform using PhotoDNA to detect child sexual abuse material (CSAM) and report any CSAM content and perpetrators to NCMEC, which subsequently works with local law enforcement to take appropriate action.
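To make the idea of a rules engine more concrete, the sketch below shows a generic pattern in Python: each rule pairs a predicate over an activity event with a uniform action, in the spirit of the Safety Rules Engine described in the overview. Everything here is an assumption for illustration, including the `Rule` class, the event field names, the thresholds, and the action labels; none of it reflects how Discord's internal engine is actually built.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Rule:
    name: str
    matches: Callable[[dict], bool]  # predicate over one activity event
    action: str                      # uniform action, e.g. "challenge" or "ban"


def evaluate(event, rules):
    """Return (rule name, action) for every rule the event triggers."""
    return [(rule.name, rule.action) for rule in rules if rule.matches(event)]


# Hypothetical rules; field names and thresholds are made up for this sketch.
RULES = [
    Rule(
        "rapid_server_joins",
        lambda e: e.get("type") == "server_join" and e.get("joins_last_hour", 0) > 50,
        "challenge",
    ),
    Rule(
        "new_account_mass_dm",
        lambda e: e.get("type") == "dm"
        and e.get("account_age_days", 365) < 1
        and e.get("recipients_last_hour", 0) > 100,
        "ban",
    ),
]
```

Keeping rules as data makes it possible to apply the same action to every event that matches the same pattern, which is one way to read "uniform actions" in the description above.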

Investing in technological advancements and tools to proactively detect CSAM and grooming is a key priority for us, and we have a dedicated team to handle related content. In Q2 2023, we proactively removed 99% of servers found to be hosting CSAM. You can find more information in Discord’s latest Transparency Report.

There is constant innovation taking place within and beyond Discord to improve how companies can effectively scale and deliver content moderation. Our approach will continue to evolve as time goes on, as we’re constantly finding new ways to do better for our users.

Partnerships

We know that collaboration is important, and we’re continuously working with experts and partners so that we have a holistic and informed approach to combating the sexual exploitation of children. We’re grateful to collaborate with the Tech Coalition and NoFiltr to help young people stay safer on Discord.

With our new series of products under the Teen Safety Assist initiative, Discord has launched a safety alert for teens to help flag when a teen might want to double-check that they want to reply to a particular new direct message. We’ve partnered with technology non-profit Thorn to design these features together based on their guidance on teen online safety behaviors and what works best to help protect teens. Our partnership is focused on empowering teens to take control over safety and how best to guide teens to helpful tips and resources.

Additional Resources

Want to learn more about Discord’s safety work? Check out these resources below:

- How we support youth mental health

- We recently launched our Teen Safety Assist initiative, which includes new products such as safety alerts and sensitive content filters.

- Check out the Family Center, a new opt-in tool that makes it easy for teens to keep their parents and guardians informed about their Discord activity while respecting their privacy and autonomy.

- Parents can check out ConnectSafely’s Parent’s Guide to Discord.

- Our friends at NoFiltr have tons of great activities and content to help you navigate your way online.

- Explore our new Safety Center and Parent Hub for more resources.

We look forward to continuing this important work and deepening our partnerships to ensure we continue to have a holistic and nuanced approach to teen and child safety.

Threats, Hate Speech, and Violent Extremism

Hate or harm targeted at individuals or communities is not tolerated on Discord in any way, and combating this behavior and content is a top priority for us. We evolved our Threats Policy to address threats of harm to others. Under this policy, we will take action against direct and indirect threats, veiled threats, those who encourage this behavior, and conditional statements to cause harm.

We also refined our Hate Speech Policy with input from a group of experts who study identity and intersectionality and came from a variety of different identities and backgrounds themselves. Under this policy, we define “hate speech” as any form of expression that denigrates, vilifies, or dehumanizes; promotes intense, irrational feelings of enmity or hatred; or incites harm against people based on protected characteristics.

In addition, we’ve expanded our list of protected characteristics to go beyond what most hate speech laws cover to include the following: age; caste; color; disability; ethnicity; family responsibilities; gender; gender identity; housing status; national origin; race; refugee or immigration status; religious affiliation; serious illness; sex; sexual orientation; socioeconomic class and status; source of income; status as a victim of domestic violence, sexual violence, or stalking; and weight and size.

Last year, we wrote about how we address Violent Extremism on Discord. We are deeply committed to our work in this space and have updated our Violent Extremism Policy to prohibit any kind of support, promotion, or organization of violent extremist groups or ideologies. We worked closely with a violent extremism subject-matter expert to update this policy and will continue to work with third-party organizations like the Global Internet Forum to Counter Terrorism and the European Union Internet Forum to ensure it is enforced properly.

Financial Scams, Fraud Services, and Malicious Conduct

During 2022, we invested significantly in how we combat criminal activity on Discord. In February, we released two blogs to help users identify and protect themselves against scams on Discord. Alongside these, we developed a Financial Scams Policy which prohibits three types of common scams: identity scams, investment scams, and financial scams. We will take action against users who scam others or use Discord to coordinate scamming operations. In the case of users who have been the victim of fraudulent activity, we recommend they report the activity to Discord and contact law enforcement, who will be able to follow up with us for more information if it helps their investigation.

Under our new Fraud Services Policy, we've expanded our definitions of what constitutes fraudulent behavior. We will take action against users who engage in reselling stolen or ill-gotten digital goods or are involved in coordinated efforts to defraud businesses or engage in price gouging, forgery, or money laundering.

We also do not allow any kind of activity that could damage or compromise the security of an account, computer network, or system under our updated Malicious Conduct Policy. We will take action against users who use Discord to host or distribute malware, or carry out phishing attempts and denial-of-service attacks against others.

What’s Next

User safety is our top priority and we’re committed to ensuring Discord continues to be a safe and welcoming place. We regularly evaluate and assess our policies in collaboration with experts, industry-leading groups, and partner organizations. We look forward to continuing our work in this important area and plan to share further updates down the road.

The work we've done

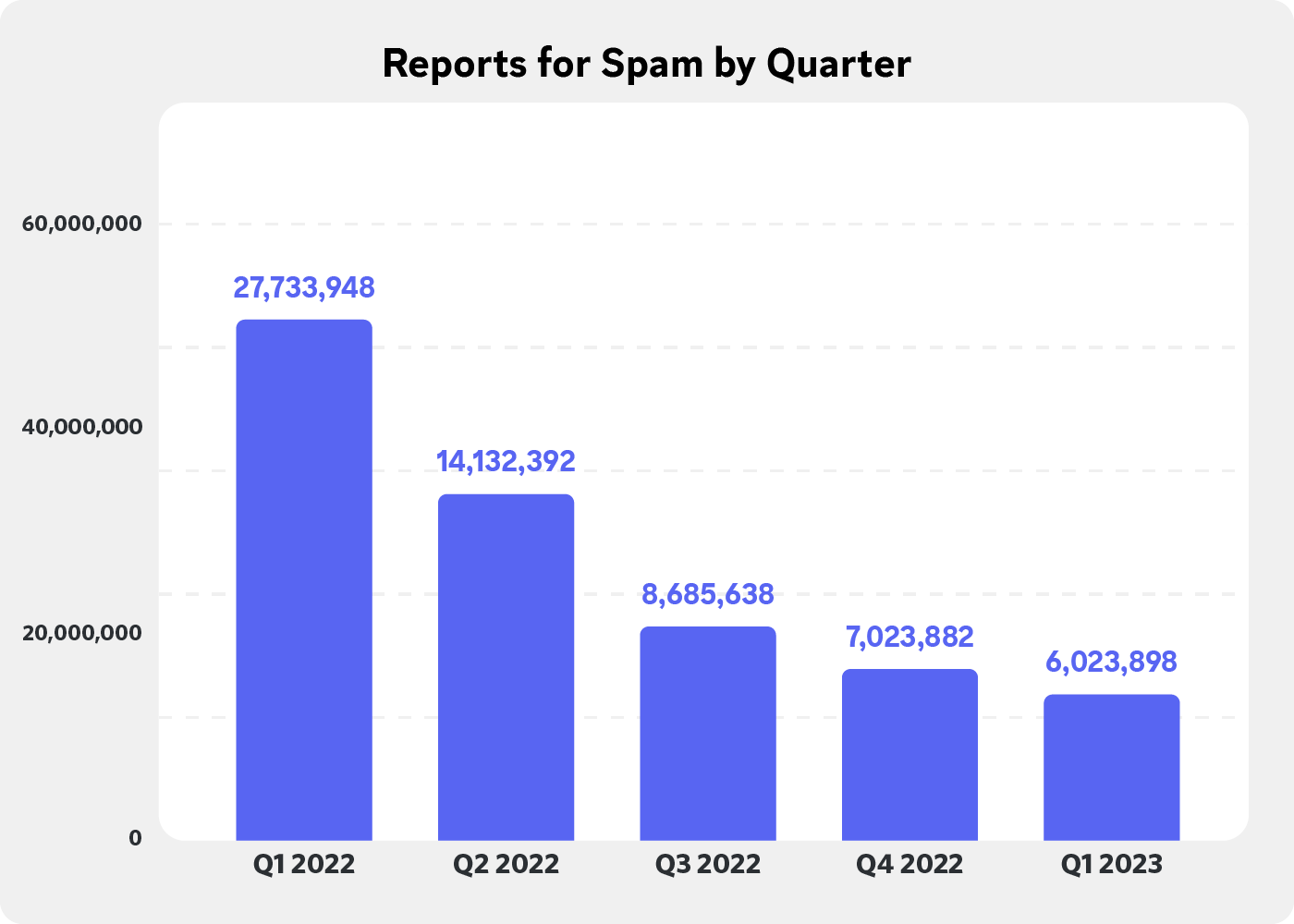

We invested a substantial amount of time and resources into combating spam in 2022, disabling 141,087,602 accounts for spam or spam-related offenses. Of these, 99% were removed proactively, before we received a user report.

We observed a significant increase in the number of accounts disabled during the third quarter of 2022, leading to an elevated but lower fourth quarter, and then to a substantial decrease in the first quarter of 2023. These trends can be attributed to both increased and ongoing investments in our anti-spam team and a shift in our approach to reducing the number of disabled accounts by placing greater confidence in our proactive spam mitigations and avoiding false positives. As a result, only 0.004% of disabled accounts submitted an appeal during the past quarter.

Spammers are constantly evolving and adapting to new technologies and methods of detection, moving to use new parts of the platform to target users. Combating spam requires a multi-faceted approach that employs new tools and technologies, while also remaining vigilant and adaptable to changing trends. We're constantly updating our approach to remove spam, and as our proactive efforts have increased, we've observed a steady decline in the number of reports we receive for spam.

New features, products, and tools to help combat spam on Discord

The above trends were made possible by a number of technological advances over the past year in both back-end and front-end features, products, and tools that help prevent and remove spam from Discord.

- AutoMod: We launched AutoMod, which enables server owners to set rules to better detect spam in their servers. This filter, powered by machine learning, is informed by spam messages that have been previously reported to us.

- DM spam filter: We recently launched a DM spam filter that enables users to filter messages detected as spam into a separate spam inbox.

- Friend Request clear all: We also launched a new feature to allow people to clear out all their friend requests with the click of a button.

- Anti-Raid protection for servers: Based on feedback from the community, we’ve been developing and investing in features to help reduce server raids and stay on top of an ever-changing landscape.

- Actions beyond bans: We've developed new methods to remediate spam on Discord by creating interventions that don't exclusively result in disabled accounts. This includes account quarantines and other blockers on suspicious accounts.

Our commitment to this work

Discord is committed to combating spam and building a safer environment for people to find belonging. We've grown our teams, shipped new features, products, and tools, and continue to listen closely to users about how spam impacts their experience on Discord.

We're constantly updating our approach to remove spam and remain vigilant and adaptable to changing trends. We will continue to prioritize safety and work hard to combat spam in order to help make Discord the best place for people and communities to hang out online.

We’re bridging a key gap in CSAM detection

To understand how the tech industry is working to solve these challenges, it’s helpful to understand the technology itself.

The industry standard for CSAM detection is a tool called PhotoDNA, which was developed by Microsoft and donated to the National Center for Missing & Exploited Children (NCMEC)—the nationally recognized hub for reporting suspected online child sexual exploitation in the US.

When imagery depicting child sexual abuse is reported to NCMEC, PhotoDNA is used to create a unique digital signature (or “hash”) of that image. That hash—and not the illegal imagery itself—is stored in a database that serves as a reference point for online detection efforts.

At Discord, we proactively scan images using hashing algorithms (including PhotoDNA) to detect known CSAM. For example, if someone uploads an image that matches against known CSAM that has been previously reported, we automatically detect it, remove it, and flag it for internal review. When we confirm that someone attempted to share illegal imagery, we remove the content, permanently ban them from our platform, and report them to NCMEC, who subsequently works with law enforcement.

There are more than 6.3 million hashes of CSAM in NCMEC’s hash database and more than 29 million hashes of CSAM contained in databases from around the world. That gives the tech industry a strong foundation for stopping the spread of this harmful material on our platforms. But there’s one key limitation: hash matching only detects exact or similar copies of images that are already logged in a database. Predatory actors try to exploit this weakness with unknown CSAM that has yet to be seen or logged. Such unknown material indicates either a likelihood of active abuse or that the images were synthetically generated using AI.
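The limitation of hash matching can be illustrated with a minimal sketch. This is not PhotoDNA, whose algorithm is not described here. It is a generic stand-in that assumes each image has already been reduced to a fixed-width integer hash and that "similar" means a small number of differing bits (Hamming distance). The hash values, the `find_match` helper, and the `max_distance` threshold are all hypothetical.

```python
from typing import Iterable, Optional


def hamming_distance(a: int, b: int) -> int:
    """Count the bits that differ between two equal-width integer hashes."""
    return bin(a ^ b).count("1")


def find_match(upload_hash: int,
               known_hashes: Iterable[int],
               max_distance: int = 4) -> Optional[int]:
    """Return the closest known hash within max_distance bits, or None.

    max_distance is an illustrative threshold, not a real-world setting.
    """
    best = None
    best_dist = max_distance + 1
    for known in known_hashes:
        dist = hamming_distance(upload_hash, known)
        if dist < best_dist:
            best, best_dist = known, dist
    return best


# Hypothetical 16-bit hashes standing in for a database of known images.
KNOWN = [0b1011_0010_1110_0001, 0b0000_1111_0000_1111]

exact = find_match(0b1011_0010_1110_0001, KNOWN)  # identical copy
near = find_match(0b1011_0010_1110_0011, KNOWN)   # one bit differs
novel = find_match(0b0101_0101_0101_0101, KNOWN)  # nothing close in the database
```

In this sketch the exact and near copies both resolve to the first known hash, while the novel hash returns `None`. That last case is the gap described above: an image that was never logged cannot be matched, however harmful it is.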

Machine learning helps us stop CSAM at scale

Unknown CSAM has continually plagued our industry, and our team felt it was important to advance our own detection capabilities. We started with an open-source model that can analyze images to understand what they are depicting. Our engineers experimented and found that we could use this model to determine how similar one image is to another. Because of the model’s semantic understanding, it also demonstrated promising results for novel CSAM detection.

When we find a match, we follow the same process that we use for PhotoDNA matches: A human reviews it to verify if it is an instance of CSAM. If it is, it’s reported to NCMEC and added to the PhotoDNA database. From that point, the newly verified image can be detected automatically across platforms. This speeds up the review process, expands the database of known CSAM, and makes everyone better and faster at blocking CSAM over time. This is a major advancement in the effort to detect, report, and stop the spread of CSAM.
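One common way to compare images by meaning rather than by exact pixels is to compare embedding vectors produced by a model. The sketch below assumes that approach. The source does not say which model, similarity measure, or threshold Discord uses, so cosine similarity, the `needs_human_review` helper, and the `threshold` value are illustrative assumptions. It only shows how a similarity score could route an upload to the human review step described above.

```python
import math
from typing import Sequence


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def needs_human_review(embedding: Sequence[float],
                       reference_embeddings: Sequence[Sequence[float]],
                       threshold: float = 0.9) -> bool:
    """Flag an upload for human review if it is close to any reference vector.

    The threshold is a hypothetical value chosen for illustration.
    """
    return any(cosine_similarity(embedding, ref) >= threshold
               for ref in reference_embeddings)


# Toy 3-dimensional vectors; real embeddings have many more dimensions.
references = [[1.0, 0.0, 0.0]]
flagged = needs_human_review([0.9, 0.1, 0.0], references)
ignored = needs_human_review([0.0, 1.0, 0.0], references)
```

A flag here is only a candidate for review. As the text notes, a person verifies each match before anything is reported.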

At a recent Tech Coalition hackathon, engineers from Discord and other tech companies collaborated on ways we can work together to identify new forms of CSAM that evade existing detection methods. During this collaboration, we worked to make the AI-powered detection mechanism we built open source. At Discord, we believe safety shouldn’t be proprietary or a competitive advantage. Our hope is that by making this technology open source, we can bring new capabilities and protections to all tech platforms, especially newer companies that don’t yet have established trust and safety teams.

This is part of our work alongside the Lantern program, which is committed to stopping people from evading CSAM detection across platforms. Together, we’re working to increase prevention and detection methods, accelerate identification processes, share information on predatory tactics, and strengthen our collective reporting to authorities.

Ultimately, this is an ideal use of machine learning technology. It’s important to note, though, that human expertise and judgment are still involved. Our expert staff continues to review anything that’s not an exact match with PhotoDNA, because the stakes are high and we need to get it right. What matters most is that the combined efforts of machine learning and human expertise are making it harder than ever for predatory actors to evade detection.

We’re working to build a safer internet. It’s in our DNA.

Safety is a top priority at Discord. It’s reflected in our personnel: roughly 15% of our staff is dedicated to safety. These teams work to ensure our platform, features, and policies result in a positive and safe place for our users to hang out and build community.

We’re stronger together

The safety landscape is continually shifting. There are always new challenges emerging, and no single entity can tackle them alone.

Our industry can lead by sharing tools, intelligence, and best practices. We also need to work collaboratively with policymakers, advocacy groups, and academics to fully understand emerging threats and respond to them. Initiatives like Lantern and organizations like the Tech Coalition represent the kind of collaborative efforts that make it possible for us all to better stay ahead of bad actors and work together towards a universally safe online experience for everyone.

What it means to start with safety

As soon as we start building something new, we assess the risks that might come from it. By starting at the concept phase, we can factor safety into everything we do as we design, build, launch, and manage a new product.

Throughout the process, our teams weigh in with specialized knowledge in their domains, including teen safety, counter-extremism, cybercrime, security, privacy, and spam. Functionally, this is how we pinpoint any gaps that may exist as we build the products and features that make Discord a fun, safe place to hang out with friends.

Four key questions for building safer products

Any time we build something, we prioritize four key questions to determine our approach to building safer products:

- How will we detect harmful activity or content, and balance the need for safety, security, and privacy?

- How will we review the content or activity we detect? This includes understanding how we will weigh it against our policies, guidelines, or frameworks, and how we will determine the severity of the harm.

- How will we enforce our rules against actions that we find to be harmful?

- How will we measure the outcomes and efficacy of decisions? We want to ensure our safety efforts work well, that we reevaluate them when we need to, and that we hold ourselves accountable for delivering safe experiences for our users.

There are some things we consider with each product. We’ll look, for instance, at our data to learn more about the most common kinds of violations that are related to what we’re building. That can help us understand the most harmful and most abundant violations we know about today.

But our products don’t all have the same behaviors or content, so we can’t just copy-paste our work from one tool to another. Think of the Family Center or voice messages, for example. They have unique purposes and functions, so we needed different answers to each of the questions above as we built them. By starting with these questions on day one, we were able to make the right decisions for each tool at each step of the process and maximize our approach to safety in the end.

By following this approach, everyone involved in making our products has a clear-eyed and honest understanding of the risks that could potentially arise from our work. We make intentional decisions to minimize those risks and commit to only releasing something if it meets our high standard of safety.

What starting with safety looks like in practice

Let’s look a little deeper at voice messages, the feature that lets you record audio on your phone and easily send it to your friends. Here’s a brief rundown of how we incorporated safety conversations into every aspect of bringing this fun tool to life.

At the earliest stage, the product team drew up preliminary specifications and consulted with trust and safety subject matter experts on staff. The product manager shared the specs with individuals from the policy and legal teams, with a focus on flagging any concerns or discrepancies regarding Discord’s own policies.

Once initial feedback was incorporated, the product manager created an action plan, bringing on the engineers who began building the feature. Even during this stage, safety experts were part of the process. In fact, it was here that members of the safety team called for an additional requirement—a way for us to handle large amounts of audio messages. Just as users can report written messages, they also can report audio messages for content that violates our policies. Our safety teams needed to ensure we had a way to review those reports effectively as they flow in.

In this instance, the team had to figure out how the audio review process would even work. Would reviewers have to sit and listen to hours of reported messages each day? That didn’t seem viable, so the core team decided to use existing text tools that could transcribe reported messages. Now reviewers can evaluate the text quickly, and review the audio for additional context.

Once again, the product manager and engineers worked closely with the safety team to build in this additional layer of safety.

Just because our voice messages feature is live doesn’t mean our safety job is complete. We need to understand how effective our methods are, so we’ve developed metrics that track safety and give us insight into the impacts of the decisions we’ve made.

We track, for instance, if we have unusual rates of flagged content or appeals on decisions, as well as the breakdown of various violations on our platform. We summarize these metrics in our quarterly Transparency Reports.
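As a rough sketch of what checking for an "unusual rate" could look like, the example below compares the current rate with the mean and standard deviation of past rates. The source does not describe how Discord defines unusual, so the `is_unusual` helper, the three-standard-deviation rule, and the sample numbers are all assumptions made for illustration.

```python
from statistics import mean, stdev
from typing import Sequence


def is_unusual(history: Sequence[float], current: float, k: float = 3.0) -> bool:
    """Return True if current is more than k standard deviations from the mean.

    history holds past rates (for example, flagged messages per message sent).
    With fewer than two data points there is no spread to compare against.
    """
    if len(history) < 2:
        return False
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return current != mu
    return abs(current - mu) > k * sigma


# Hypothetical weekly flag rates for a feature.
weekly_flag_rates = [0.010, 0.012, 0.011, 0.009, 0.010]
spike = is_unusual(weekly_flag_rates, 0.030)
normal = is_unusual(weekly_flag_rates, 0.011)
```

A check like this only raises a question. Deciding whether a spike reflects real abuse, a reporting campaign, or a detection bug still takes investigation.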

What it doesn’t look like

To better understand our safety-centric approach, consider the alternative.

A different platform could start with an innovative idea and the best intentions. It could build the product it thinks would be most exciting for users, and review the risk and safety implications when it’s ready to go live. If the company identifies risks at a late stage, it has to decide what to address before releasing the product to the world.

This can happen, and the approach is flawed. If you’re already at the finish line when you notice the risks you’ve created, you might realize you have to start over to do it right. Building a product this way is risky, and users are the ones most exposed to those risks.

How Discord starts with safety

At Discord, we understand that we have to think about safety and growth holistically.

Discord is built differently from other platforms. We don't chase virality or impressions. Discord makes money by providing users a great experience through premium subscriptions. Under this model, we can focus on our users and have more freedom to prioritize safety.

Put simply, we’re only interested in growing a platform that’s safe. We’re here to help people forge genuine friendships, and we know that can only happen when people feel safe connecting with one another.

Recognizing Safer Internet Day

Safer Internet Day was created by advocacy organizations as a way to raise awareness around online safety. It has since expanded into an opportunity for industry, government, and civil society to come together to share online safety and digital well-being best practices.

Making the internet a better, safer place for everyone is a tall task — and we know we can’t do it alone. Last year, as part of our ongoing partnership with the National Parent Teacher Association, we co-hosted an event featuring some of our teen moderators talking about belonging and mental health in online spaces. We’ve continued this partnership with the PTA Connected: Build up and Belong Program. This program helps families explore the use of technology as a communication and relationship tool, discusses ways to build belonging and positive communities in our digital world, teaches how to navigate privacy and safety on digital platforms, and helps families have interactive conversations about online scenarios, experiences and expectations.

We’re committed to helping build a safer internet on Safer Internet Day and beyond. To continue that mission, Discord has partnered with several international leaders in the online safety space.

Our Work with NoFiltr

This year, we’re teaming up with NoFiltr to help educate Discord users on the importance of safer internet practices and to empower people to use Discord and other platforms in a smart and healthy manner. One of the ways we’ll be working together is by engaging with NoFiltr’s Youth Innovation Council to co-develop educational resources that connect with users where they are.

For this year’s Safer Internet Day, Discord and NoFiltr, with help from the Youth Innovation Council, are launching the “What’s Your Online Digital Role?” quiz. We believe that everyone can play a part in helping make online communities a safe and inclusive space, and this interactive quiz can help you figure out what role best suits you when it comes to being a part of and building a safe community. Take the quiz here to find out.

International Partnerships and Public Policy Roundtable

Discord is partnering with Childnet UK and Internet Sans Crainte, two European Safer Internet Centers dedicated to increasing awareness and education about better online safety practices for youth.

In addition, we’ll be hosting a roundtable event in Brussels where policymakers, civil society thought leaders, and industry partners will come together to share insights, discuss challenges, and identify steps we can take together to make Discord and the internet a safer place for young people.

Engaging our Communities with Educational Activities

We also wanted to provide materials and tools that everyone can use to help facilitate healthy and open conversation about online safety — it’s not so easy approaching difficult or sensitive topics when it comes to talking about your experiences online.

To help kick start these important discussions in a more approachable way, we’ve made a print-at-home fortune teller filled with questions and icebreaker prompts that can help you lead a conversation about better digital health and safer online practices.

Interested in your own Safer Internet Day fortune teller? Check out our Safer Internet Day home page on Discord’s Safety Center, where you can print out and assemble your very own fortune teller.

We’ll be showcasing how to use this resource with our communities as part of our Safer Internet Day celebration.

Discord Town Hall Public Event

We’ll be celebrating Safer Internet Day in the publicly joinable Discord Town Hall server with an event all about Safety, including a walk-through of the fortune teller activity. You can join Discord’s official Town Hall server for the event happening on February 7th, 2023.

Discord’s Commitment to a Safer Space

At Discord, we are deeply committed to helping communities thrive on our platform. Our teams are always working to make Discord an even more accessible and welcoming place to hang out online with your friends and build your community. Here are a couple of safety highlights from the last year:

AutoMod: Making Your Communities Safer Has Never Been Easier

In June, we debuted our new automated moderation tool, AutoMod, for community owners and admins looking for an easier way to keep communities and conversations welcoming and to empower them to better handle bad actors who distribute malicious language, harmful scams or links, and spam.

Today, AutoMod is hard at work in over 390,000 servers, reducing the workload for thousands of community moderators everywhere. So far, we’ve removed over 20 million unwanted messages. That’s 20 million fewer actions keeping moderators away from actually enjoying and participating in the servers they tend to. Check out our AutoMod blog post if you’re interested in getting AutoMod up and running in your community.

The Great Spam Removal

Spam is a problem on Discord, and we treat it as seriously as any other problem that impacts your ability to talk and hang out on Discord. That’s why this year, we’ve increased the time and resources we put into refining our policies and operational procedures to more efficiently and accurately target bad actors. We’ve had over 140 million spam takedowns in 2022, and we’ll continue to remove spam from our platform in the future. Read more about Discord’s efforts to combat spam on our platform here.

More Safety Resources

Wanna learn more about online safety and how you can keep yourself and others safer online? We’ve gathered these resources to help give you a head start:

- Check out our Safety Center for more resources and guides.

- Are you a Discord community owner? This article from our Community Portal is all about how to keep your Discord Community safer.

- Our friends at NoFiltr have tons of great activities and content to help you navigate your way online.

- Interested in what else is going on for Safer Internet Day? Learn more about Safer Internet Day here!

- Parents can check out ConnectSafely’s Parent’s Guide to Discord.

We’re committed to making Discord a safe place for teens to hang out with their friends online. While they’re doing their thing and we’re doing our part to keep them safe, sometimes it’s hard for parents to know what’s actually going on in their teens’ online lives.

Teens navigate the online world with a level of expertise that is often underestimated by the adults in their lives. For parents, it may be a hard lesson to fathom—that their teens know best. But why wouldn’t they? Every teen is their own leading expert in their life experiences (as we all are!). But growing up online, this generation is particularly adept at what it means to make new friends, find community, express their authentic selves, and test boundaries—all online.

But that doesn’t mean teens don’t need adults’ help when it comes to setting healthy digital boundaries. And it doesn’t mean parents can’t be a guide for cultivating safe, age-appropriate spaces. It’s about finding the right balance between giving teens agency while creating the right moments to check in with them.

One of the best ways to do that is to encourage more regular, open and honest conversations with your teen about staying safe online. Here at Discord, we’ve developed tools to help that process, like our Family Center: an opt-in program that makes it easy for parents and guardians to be informed about their teen’s Discord activity while respecting their autonomy.

Here are a few more ways to kick off online safety discussions.

Let your curiosity—not your judgment—guide you

If a teen feels like they could get in trouble for something, they won’t be honest with you. So go into these conversations from a place of curiosity first, rather than judgment.

Here are a few conversation-starters:

- Give me a tour of your Discord. Tell me what you're seeing.

- What kind of communities are you in? Where are you having the most fun?

- What are your settings like? Do you feel like people can randomly reach out to you?

- Have there been times when you've been confused by how someone interacted with you? Were you worried about some of the messages that you've seen in the past?

Teens will be less likely to share if they feel like parents just don’t get it, so asking open questions like these will foster more conversation. Questions rooted in blame can also backfire: the teen may not be as forthcoming because they feel like the adult is already gearing up to punish them.

Read more helpful prompts for talking with your teen about online safety in our Discord Safety Center.

View your teen as an expert of their experience online

Our goal at Discord is to make it a place where teens can talk and hang out with their friends in a safe, fun way. It’s a place where teens have power and agency, where they get to feel like they own something.

Just because your teen is having fun online doesn't mean you have to give up your parental role. Parents and trusted adults in a teen’s life are here to coach and guide them, enabling them to explore themselves and find out who they are—while giving them the parameters by which to do so.

On Discord, some of those boundaries could include:

- Turning on Direct Message Requests so that messages from strangers on larger servers go to a separate inbox to be approved first.

- Creating different server profiles for servers that aren’t just their close friends. You and your teen can discuss how much information should be disclosed on different servers, for example by using different nicknames or disclosing pronouns on a server-by-server basis.

- Using Discord’s Family Center feature so you can be more informed and involved in your teens’ online life without prying.

Create more excuses to have safety conversations

At Discord, we’ve created several tools to help parents stay informed and in touch with their teens online, including this Parent’s Guide to Discord and the Family Center.

In the spirit of meeting teens where they are, we’ve also introduced a lighthearted way to spur conversations through a set of digital safety tarot cards. Popular with Gen Z, tarot cards are a fun way for teens to self-reflect and find meaning in a world that can feel out of control.

The messages shown in the cards encourage teens to be kind and to use their intuition and trust their instincts. They remind teens to fire up their empathy, while also reminding them it’s OK to block those who bring you down.

And no, these cards will (unfortunately) not tell you your future! But they’re a fun way to initiate discussions about online safety and establish a neutral, welcoming space for your teen to share their concerns. They encourage teens to share real-life experiences and stories of online encounters, both positive and negative. The idea is to get young people talking, and parents listening.

Know that help is always available

Sometimes, even as adults, it's easy to get in over your head online. Through our research with parents and teens, we found that while 30% of parents said their Gen Zer’s emotional and mental health had taken a turn for the worse in the past few years, 55% of Gen Z said the same. And while some teens acknowledged that being extremely online can contribute to that, more reported that online communications platforms, including social media, play a positive role in their life through providing meaningful community connection. Understanding healthy digital boundaries and how they can impact mental wellbeing is important, no matter if you’re a teen, parent, or any age in-between.

When it comes to addressing the unique safety needs of each individual, there are resources, such as Crisis Text Line. Trained volunteer Crisis Counselors are available to support anyone in their time of need 24/7. Just text DISCORD to 741741 to receive free and confidential mental health support in English and Spanish.

Summary of Current Situation

Because investigations are ongoing, we can only share limited details. What we can say is that the alleged documents were initially shared in a small, invite-only server on Discord. The original server has been deleted, but the materials have since appeared in several additional servers.

Our Terms of Service expressly prohibit using Discord for illegal or criminal purposes. This includes the sharing of documents that may be verifiably classified. Unlike the overwhelming majority of the people who find community on Discord, this group departed from the norms, broke our rules, and did so in apparent violation of the law.

Our mission is for Discord to be the place to hang out with friends online. With that mission comes a responsibility to make Discord a safe and positive place for our users. For example, our policies clearly outline that hate speech, threats and violent extremism have no place on our platform. When Discord’s Trust and Safety team learns of content that violates our rules, we act quickly to remove it. In this instance, we have banned users involved with the original distribution of the materials, deleted content deemed to be against our Terms, and issued warnings to users who continue to share the materials in question.

Our Approach to Delivering a Positive User Experience

This recent incident fundamentally represents a misuse of our platform and a violation of our platform rules. Our Terms of Service and Community Guidelines provide the universal rules for what is acceptable activity and content on Discord. When we become aware of violations to our policies, we take action.

The core of our mission is to give everyone the power to find and create belonging in their lives. Creating a safe environment on Discord is essential to achieve this, and is one of the ways we prevent misuse of our platform. Safety is at the core of everything we do and a primary area of investment as a business:

- We invest talent and resources towards safety efforts. From Safety and Policy to Engineering, Data, and Product teams, about 15 percent of all Discord employees are dedicated to working on safety. Creating a safer internet is at the heart of our collective mission.

- We continue to innovate how we scale safety mechanisms, with a focus on proactive detection. Millions of people around the world use Discord every day. The vast majority engage in positive ways, but we take action on multiple fronts to address bad behavior and harmful content. For example, we use PhotoDNA image hashing to identify inappropriate images; we use advanced technology like machine learning models to identify and remedy offending content; and we empower and equip community moderators with tools and training to uphold our policies in their communities. You can read more about our safety initiatives and priorities below.

- Our ongoing work to protect users is conducted in collaboration and partnership with experts who share our mission to create a safer internet. We partner with a number of organizations to jointly confront challenges impacting internet users at large. For example, we partner with the Family Online Safety Institute, an international non-profit that endeavors to make the online world safer for children and families. We also cooperate with the National Center for Missing & Exploited Children (NCMEC), the Tech Coalition, and the Global Internet Forum to Counter Terrorism.

The fight against bad actors on communications platforms is unlikely to end soon, and our approach to safety is guided by the following principles:

- Design for Safety: We make our products safe spaces by design and by default. Safety is and will remain part of the core product experience at Discord.

- Prioritize the Highest Harms: We prioritize issues that present the highest harm to our platform and our users. This includes harm to our users and society (e.g. sexual exploitation, violence, sharing of illegal content) and platform integrity harm (e.g. spam, account take-over, malware).

- Design for Privacy: We carefully balance privacy and safety on the platform. We believe that users should be able to tailor their Discord experience to their preferences, including privacy.

- Embrace Transparency & Knowledge Sharing: We continue to educate users, join coalitions, build relationships with experts, and publish our safety learnings including our Transparency Reports.

Underpinning all of this are two important considerations: our overall approach towards content moderation and our investments in technology solutions to keep our users safe.

Our Approach to Content Moderation

We currently employ three levers to moderate user content on Discord, while remaining mindful of user privacy:

- User Controls: Our product architecture provides each user with fundamental control over their experience on Discord including who they communicate with, what content they see, and what communities they join or create.

- Platform Moderation: Our universal Community Guidelines apply to all content and every interaction on the platform. These fundamental rules are enforced by Discord on an ongoing basis through a mix of proactive and reactive work.

- Community Moderation: Server owners and volunteer community moderators define and enforce norms of behavior for their communities that can go beyond the Discord Community Guidelines. We enable our community moderators with technology (tools like AutoMod) as well as training and peer support (the Discord Moderator Academy).

There is constant innovation taking place within and beyond Discord to improve how companies can effectively scale and deliver content moderation. In the future, our approach will continue to evolve, as we are constantly finding new ways to do better for our users.

Our Technology Solutions

We believe that in the long term, machine learning will be an essential component of safety solutions. In 2021, we acquired Sentropy, a leader in AI-powered moderation systems, to advance our work in this domain. We will continue to balance technology with the judgment and contextual assessment of highly trained employees, as well as continuing to maintain our strong stance on user privacy.

Here is an overview of some of our key investments in technology:

- Safety Rules Engine: The rules engine allows our teams to evaluate user activities such as registrations, server joins, and other metadata. We can then analyze patterns of problematic behavior to make informed decisions and take uniform actions like user challenges or bans.

- AutoMod: AutoMod allows community moderators to block messages with certain keywords, automatically block dangerous links, and identify harmful messages using machine learning. This technology empowers community moderators to keep their communities safe.

- Visual Safety Platform: This is a service that can identify hashes of objectionable images such as child sexual abuse material (CSAM), and check all image uploads to Discord against databases of known objectionable images.
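To make the idea of a rules engine more concrete, here is a minimal sketch in which each rule pairs a condition on an activity event with an action. It is not Discord's Safety Rules Engine or AutoMod. The `Rule` class, the event fields, the thresholds, and the blocked phrase are all hypothetical and chosen only to mirror the kinds of signals listed above (server joins, message keywords).

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical blocked phrases, stored in lower case for matching.
BLOCKED_KEYWORDS = ("free gift card",)


@dataclass
class Rule:
    name: str
    condition: Callable[[Dict], bool]  # returns True when the rule applies
    action: str                        # e.g. "challenge" or "block_message"


def evaluate(event: Dict, rules: List[Rule]) -> List[str]:
    """Return the action of every rule whose condition matches the event."""
    return [rule.action for rule in rules if rule.condition(event)]


RULES = [
    # Many server joins in a short window may indicate automated behavior.
    Rule("rapid_server_joins",
         lambda e: e.get("server_joins_last_hour", 0) > 50,
         "challenge"),
    # Case-insensitive keyword match on message text.
    Rule("blocked_keyword",
         lambda e: any(k in e.get("message", "").lower() for k in BLOCKED_KEYWORDS),
         "block_message"),
]

join_actions = evaluate({"server_joins_last_hour": 80}, RULES)
spam_actions = evaluate({"message": "Claim your FREE GIFT CARD now"}, RULES)
clean_actions = evaluate({"message": "hello"}, RULES)
```

Keeping conditions and actions as data makes it straightforward to apply the same action uniformly to every event that matches a pattern, which is the property the overview above emphasizes.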

Our Partnerships

In the field of online safety, we are inspired by the spirit of cooperation across companies and civil society groups. We are proud to engage with and learn from a wide range of companies and organizations including:

- National Center for Missing & Exploited Children

- Family Online Safety Institute

- Tech Coalition

- Crisis Text Line

- Digital Trust & Safety Partnership

- Trust & Safety Professionals Association

- Global Internet Forum to Counter Terrorism

This cooperation extends to our work with law enforcement agencies. When appropriate, Discord complies with information requests from law enforcement agencies while respecting the privacy and rights of our users. Discord also may disclose information to authorities in emergency situations when we possess a good faith belief that there is imminent risk of serious physical injury or death. You can read more about how Discord works with law enforcement here.

Our Policy and Safety Resources

If you would like to learn more about our approach to Safety, we welcome you to visit the links below.

Some of the most recognizable names in tech such as Snap, Google, Twitch, Meta, and Discord unveiled Lantern, a pioneering initiative aimed at thwarting child predators who exploit platform vulnerabilities to elude detection. This vital project marks a significant stride forward in safeguarding children who on average spend up to nine hours a day online. It underscores a critical truth: protecting our children in the digital realm is a shared responsibility, necessitating a unified front from both tech behemoths and emerging players.

The digital landscape must be inherently safe, embedding privacy and security at its core, not merely as an afterthought. This philosophy propelled my journey to Discord, catalyzed by a profound personal reckoning. In 2017, harrowing discussions about children's online experiences triggered a series of panic attacks for me, leading to a restless quest for solutions. This path culminated, with a gentle nudge from my wife, in the creation of a company that used AI to detect online abuse and make the internet safer for everyone, which was later acquired by Discord. This mission has been and remains personal for me!

Discord, now an eight-year-old messaging service with mobile, web, and stand-alone apps and over 150 million monthly users, transcends being just a platform. It's a sanctuary, a space where communities, from gamers to study groups, and notably LGBTQ+ teens, find belonging and safety. Our ethos is distinct: we don't sell user data or clutter experiences with ads. Discord isn’t the place you go to get famous or attract followers. We align our interests squarely with our users, fostering a safe and authentic environment.

However, our non-social media identity doesn't preclude collaboration with social media companies in safeguarding online spaces. Just as physical venues like airports, stadiums and hospitals require tailored security measures, so too do digital platforms need safety solutions that meet their unique architecture. With all the different ways that kids experience life online, a one-size-fits-all approach simply won’t work. This is not a solitary endeavor but a collective, industry-wide mandate. Innovating in safety is as crucial as in product features, requiring open-source sharing of advancements and knowledge. For example, our team at Discord has independently developed new technologies to better detect child sexual abuse material (CSAM), and has chosen to make them open source so that other platforms can use this technology without paying a penny.

The tech industry must confront head-on the most severe threats online, including sexual exploitation and illegal content sharing. Parents rightfully expect the digital products used by their children to embody these safety principles. Lantern is a pivotal advancement in this ongoing mission.

Yet, as technology evolves, introducing AI, VR, and other innovations, the safety landscape continually shifts, presenting new challenges that no single entity can tackle alone. The millions the tech sector invests must be used to ensure users on our platforms are actually safe, not to persuade Washington that they’re safe. Our industry can lead by sharing with each other and working collaboratively with legislators, advocacy groups, and academics. That collaboration is key to ensuring a universally safe online experience for our children.

The essence of online safety lies in an unprecedented collaborative effort globally. The launch of Lantern is not just a step but a leap forward, signaling a new era of shared responsibility and action in the digital world. It’s time for us to focus on a safer tomorrow!

Safer Internet Day Fortune Teller

Not sure how to approach difficult or sensitive topics when it comes to talking about your experiences online? Check out our print-at-home fortune teller filled with questions and icebreaker prompts that can help jump start a conversation about better digital health and safer online practices.

What’s Your Community Role Quiz w/NoFiltr

Everyone’s got a role to play in helping make online communities safe and inclusive spaces. Wanna find out yours?

For this year’s Safer Internet Day, Discord and NoFiltr, with help from the Youth Innovation Council, are launching the “What’s Your Online Digital Role?” quiz. We believe that everyone can play a part in helping make online communities a safe and inclusive space, and this interactive quiz can help you figure out what role best suits you when it comes to being a part of and building a safe community. Take the quiz here to find out.

Safer Internet Day Partners

We’re committed now more than ever to helping spread the message of Safer Internet Day. In continuing our mission of making your online home a safer one, Discord is partnering with Childnet UK and Internet Sans Crainte, two European Safer Internet Centers dedicated to increasing awareness and education about better online safety practices for youth.

In addition, we’ll be hosting a round table event in Brussels where policymakers, civil society thought leaders, and industry partners will come together to share insights, discuss challenges, and identify steps we can take together to make Discord and the internet a safer place for young people.

More Safety Resources

Wanna learn more about online safety and how you can keep yourself and others safer online? We’ve gathered these resources to help give you a head start:

- Check out our Safety Center for more resources and guides.

- Are you a Discord community owner? This article from our Community Portal is all about how to keep your Discord Community safer.

- Our friends at NoFiltr have tons of great activities and content to help you navigate your way online.

- Interested in what else is going on for Safer Internet Day? Learn more about Safer Internet Day here!

- Parents can check out ConnectSafely’s Parent’s Guide to Discord.

Together for a better internet

The motto for Safer Internet Day is “together for a better internet.” At Discord, we’re constantly working to make our platform the best place for our users, including teens, to hang out online.

If we want to do this right, we need to ensure people’s voices are heard, especially those of our younger users. We have to engage with youth directly to hear their firsthand experiences and perspectives on how to shape features to meet their needs. That’s why we’re working closely with organizations and teens from around the world to develop an aspirational charter that helps us understand how they want to feel on Discord, while informing the ways we build new products and features to keep them safe on the platform.

A charter for a better place to hang out

We’re consulting the clinical researchers at the Digital Wellness Lab and NoFiltr’s Youth Innovation Council, and engaging our own global focus groups to not only better understand how teens want to feel on Discord, but also what it takes to ensure they feel that way.

Based on our findings, we’ll then partner with teens to co-create a charter that outlines the rights teens have online and the best-case scenarios for authentically expressing themselves and hanging out with friends in digital spaces. While our Community Guidelines explain what isn’t allowed on Discord, these new principles will ultimately guide users to take on a shared responsibility in creating a fun and comfortable space to talk and hang out with their friends.

What we’ve learned so far

Recent focus groups have shown us that teens navigate privacy, safety, and authenticity in nuanced and sophisticated ways – and they expect online platforms to do the same. While our research is only getting started, our teen participants have already emphasized the importance of both transparency and respect on platforms in order to feel safe to be authentically themselves.

“Without privacy, the potential for trust is compromised, preventing the free exchange of ideas and hindering the development of a vibrant and supportive online community.” - NoFiltr’s Youth Innovation Council Member, 14

In the spirit of trust and transparency that teens yearn for, we’ll continue to share our processes of developing this charter openly on our Safety Center.

Added layers of protection with Teen Safety Assist

Along with our work on the charter, we’re also partnering with technology non-profit Thorn to build a set of product features to protect and empower teens. Last fall, we announced our Teen Safety Assist initiative with safety alerts on senders and sensitive content filters, and we’re about to release our next feature: safety alerts in chat.

This new feature, which is enabled by default for teens, will give users additional protection to keep all their spaces comfy on Discord. When we detect a message is potentially unwanted, teen users will receive a safety alert in chat to offer protections like blocking or reporting the sender.

The safety alert in chat will include advice on how to tackle an unwanted situation. Teen users will be encouraged to take a break from the conversation, reach out to Crisis Text Line for live help, or visit NoFiltr.org for more resources and safety tips. The feature will start rolling out for English regions in the coming weeks.

Working together with organizations to make the internet a better, safer place is something we’re committed to doing on Safer Internet Day, and every other day of the year. Discord should be a place where everyone has a home to talk, have fun, and just be themselves — we’re here for you every step of the way.

If you want to learn more about how we approach safety and privacy here at Discord, we have plenty more resources and articles throughout our Safety Center.

How the Safety Reporting Network Works

To further safeguard our users, the Safety Reporting Network allows us to collaborate with various organizations around the world to identify and report violations of our Community Guidelines. This program helps our team apply a more global perspective to our enforcement activity, providing context for important cultural nuances that allows us to quickly and effectively apply our policies to content and activity across our service.

Members of Discord’s Safety Reporting Network have access to a prioritized reporting channel, and, once our Safety team is made aware of a policy violation, we may take a range of actions, including: removing content, banning users, shutting down servers, and when appropriate, engaging with the proper authorities.

Our Ongoing Commitment

Our Safety Reporting Network is just one component of our overall commitment to safety. We continually invest in technological advancements, like our recently launched Teen Safety Assist and Family Center tools; regularly update our policies; continually invest in building our team; and more. As we continue to improve and evolve our safety efforts, you can visit our Safety Center to learn more about this ongoing work.

Parent & Educator Resources

We want you to feel equipped with the tools and resources to talk to your teens about online safety and understand what safety settings Discord already offers from trusted child safety partners. Check out the current and updated Parent & Educator Resources in our Safety Center:

- Talking about online safety with your teen: Discord is one part of your teen’s online presence. Take a look at this resource to start a conversation about online safety habits, norms, and tactics for your teen’s holistic digital presence and hygiene.

- Helping your teen stay safe on Discord: Learn about all the current safety features and tools at your teen’s disposal to make their Discord experience safer, like limiting who can direct message (DM) them, setting up profanity filters, and more.

- Answers to parents’ and educators’ top questions: You’ve got questions, and we have answers! Find answers to your most common safety, product, and policy questions directly from our teams.

We’re also partnering with child and family safety organizations to build more resources and improve our policies for the long term. One of our close partners, ConnectSafely, recently launched their Parent’s Guide to Discord.

Larry Magid, CEO of ConnectSafely, states: "Parents are rightfully concerned about any media their kids are using, and the best way to make sure they're using it safely is to understand how it works and what kids can do to maximize their privacy, security, and safety. That's why ConnectSafely collaborated with Discord to offer a guide that walks parents through the basics and helps equip them to talk with their kids about the service and how they can use it safely."

Student Hubs