Discord has seen tremendous growth. To handle this growth, our engineering team has had the pleasure of figuring out how to scale the backend services.

One piece of technology we’ve seen great success with is Elixir’s GenStage.

The perfect storm: Overwatch and Pokémon GO

This past summer, our mobile push notification system started to struggle. /r/Overwatch’s Discord had just passed 25,000 concurrent users, Pokémon GO Discords were popping up left and right, and burst notifications became a real issue.

Burst notifications slowed the entire push notification system to a crawl and sometimes brought it to a halt. Push notifications would arrive late or not arrive at all.

GenStage to the rescue

After a bit of investigation, we determined the main bottleneck was sending push notifications to Google’s Firebase Cloud Messaging service.

We realized we could immediately improve the throughput by sending push requests to Firebase via XMPP rather than HTTP.

Firebase XMPP is a bit trickier than HTTP. Firebase requires that each XMPP connection has no more than 100 pending requests at a time. If you have 100 requests in flight, you must wait for Firebase to acknowledge a request before sending another.

Since only 100 requests can be pending at a time, we needed to design our new system such that XMPP connections do not get overloaded in burst situations.

At first glance, GenStage seemed like a perfect fit for our problem.

GenStage

So what’s GenStage?

GenStage is a new Elixir behaviour for exchanging events with back-pressure between Elixir processes. [0]

What does that mean, really? Basically, it gives you the tools needed to make sure no part of your system gets overloaded.

In practice, a system with GenStage behaviours normally has several stages.

Stages are computation steps that send and/or receive data from other stages.

When a stage sends data, it acts as a producer. When it receives data, it acts as a consumer. Stages may take both producer and consumer roles at once.

Besides taking both producer and consumer roles, a stage may be called “source” if it only produces items or called “sink” if it only consumes items. [1]
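To make the three roles concrete, here is a minimal sketch of a source, a stage that is both consumer and producer, and a sink. The module names (Source, Doubler, Sink) and the doubling step are made up for illustration, and the script assumes the gen_stage Hex package can be fetched with Mix.install.

```elixir
# Assumption: the gen_stage Hex package; Mix.install/1 fetches it for a standalone script.
Mix.install([{:gen_stage, "~> 1.0"}])

defmodule Source do
  use GenStage

  # A source only produces: it hands out items from a fixed list on demand.
  def init(items), do: {:producer, items}

  def handle_demand(demand, items) do
    {events, rest} = Enum.split(items, demand)
    {:noreply, events, rest}
  end
end

defmodule Doubler do
  use GenStage

  # Consumes from Source and produces for Sink at the same time.
  def init(:ok), do: {:producer_consumer, :ok}

  def handle_events(events, _from, state) do
    {:noreply, Enum.map(events, &(&1 * 2)), state}
  end
end

defmodule Sink do
  use GenStage

  # A sink only consumes: it forwards whatever it receives to `parent`.
  def init(parent), do: {:consumer, parent}

  def handle_events(events, _from, parent) do
    send(parent, {:received, events})
    {:noreply, [], parent}
  end
end

{:ok, source} = GenStage.start_link(Source, [1, 2, 3])
{:ok, doubler} = GenStage.start_link(Doubler, :ok)
{:ok, sink} = GenStage.start_link(Sink, self())

# Subscribe bottom-up so demand flows from the sink towards the source.
{:ok, _} = GenStage.sync_subscribe(sink, to: doubler)
{:ok, _} = GenStage.sync_subscribe(doubler, to: source)
```

Nothing moves until the sink subscribes and asks for events. That demand travels upstream through the Doubler to the Source, and the events travel back down.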

The approach

We split the system into two GenStage stages: one source and one sink.

- Stage 1 — the Push Collector: The Push Collector is a producer that collects push requests. There is currently one Push Collector Erlang process per machine.

- Stage 2 — the Pusher: The Pusher is a consumer that demands push requests from the Push Collector and pushes the requests to Firebase. It only demands 100 requests at a time to ensure it does not go over Firebase’s pending request limit. There are many Pusher Erlang processes per machine.

Back-pressure and load-shedding with GenStage

GenStage has two key features that aid us during bursts: back-pressure and load-shedding.

Back-pressure

In the Pusher, we use GenStage’s demand functionality to ask the Push Collector for the maximum number of requests the Pusher can handle. This ensures an upper bound on the number of push requests the Pusher has pending. When Firebase acknowledges a request, the Pusher demands more from the Push Collector.

The Pusher knows the exact amount the Firebase XMPP connection can handle and never demands too much. The Push Collector never sends a request to a Pusher unless the Pusher asks for one.

Load-shedding

Since the Pushers put back-pressure on the Push Collector, we now have a potential bottleneck at the Push Collector. Super-duper huge bursts might overload the Push Collector.

GenStage has another built-in feature to handle this: buffered events.

In the Push Collector, we specify how many push requests to buffer. Normally the buffer is empty, but about once a month in catastrophic situations it comes in handy.

If there are way too many messages moving through the system and the buffer fills up, the Push Collector sheds incoming push requests. This comes for free from GenStage by simply specifying the buffer_size option in the init function of the Push Collector.

With these two features we are able to handle burst notifications.

The code (the important parts, at least)

Below is example code of how we set up our stages. For simplicity, we removed a lot of failure handling for when connections go down, Firebase returns errors, etc.

You can skip the code if you just want to view the results of the system.

Push Collector (the producer)
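A rough sketch of a producer along the lines described above, not the production module. The module name, the push/2 helper, and the default buffer size are assumptions for illustration; only the buffer_size option in init comes from the description. As far as the GenStage documentation describes it, an overflowing buffer keeps the most recent events by default (buffer_keep: :last), and passing buffer_keep: :first would discard new arrivals instead.

```elixir
# Assumption: the gen_stage Hex package; Mix.install/1 fetches it for a standalone script.
Mix.install([{:gen_stage, "~> 1.0"}])

defmodule PushCollector do
  use GenStage

  # Hypothetical default; the real limit is not stated in the text.
  @default_buffer_size 10_000

  def start_link(opts \\ []) do
    {buffer_size, opts} = Keyword.pop(opts, :buffer_size, @default_buffer_size)
    GenStage.start_link(__MODULE__, buffer_size, opts)
  end

  # Client API: hand a list of push requests to the collector.
  def push(collector, push_requests) when is_list(push_requests) do
    GenStage.cast(collector, {:push, push_requests})
  end

  @impl true
  def init(buffer_size) do
    # Run as a producer and cap how many undelivered events GenStage buffers.
    {:producer, :ok, buffer_size: buffer_size}
  end

  @impl true
  def handle_cast({:push, push_requests}, state) do
    # Emit the requests as events. GenStage dispatches them to consumers
    # with outstanding demand and buffers the rest, up to buffer_size.
    {:noreply, push_requests, state}
  end

  @impl true
  def handle_demand(_demand, state) do
    # Nothing to do here: buffered events are handed out by GenStage itself.
    {:noreply, [], state}
  end
end
```

The collector never tracks demand itself. It emits whatever arrives and lets GenStage's internal buffer decide what is delivered, held back, or dropped.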

Pusher (the consumer)
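A rough sketch of a consumer with manual demand, again not the production module. The Firebase XMPP connection is stood in for by a plain process: the sketch sends it hypothetical {:push, pusher, id, request} messages and expects hypothetical {:ack, id} messages back. Those message shapes and the module name are assumptions. The parts taken from the description are the initial demand of 100 and asking for one more request per acknowledgement.

```elixir
# Assumption: the gen_stage Hex package; Mix.install/1 fetches it for a standalone script.
Mix.install([{:gen_stage, "~> 1.0"}])

defmodule Pusher do
  use GenStage

  # Firebase allows at most 100 unacknowledged requests per XMPP connection.
  @max_pending 100

  def start_link(producer, conn, opts \\ []) do
    GenStage.start_link(__MODULE__, {producer, conn}, opts)
  end

  @impl true
  def init({producer, conn}) do
    state = %{conn: conn, producer_from: nil, pending: %{}, next_id: 1}
    # Run as a consumer and subscribe to the Push Collector.
    {:consumer, state, subscribe_to: [{producer, cancel: :temporary}]}
  end

  @impl true
  def handle_subscribe(:producer, _opts, from, state) do
    # Manual mode: GenStage sends no demand on our behalf, so ask for
    # exactly as many requests as the connection may have in flight.
    GenStage.ask(from, @max_pending)
    {:manual, %{state | producer_from: from}}
  end

  @impl true
  def handle_events(push_requests, _from, state) do
    {:noreply, [], Enum.reduce(push_requests, state, &send_request/2)}
  end

  @impl true
  def handle_info({:ack, id}, state) do
    if Map.has_key?(state.pending, id) do
      # One request finished, so there is room for exactly one more.
      GenStage.ask(state.producer_from, 1)
      {:noreply, [], %{state | pending: Map.delete(state.pending, id)}}
    else
      {:noreply, [], state}
    end
  end

  def handle_info(_other, state), do: {:noreply, [], state}

  # Hand the request to the (hypothetical) connection process and track it as pending.
  defp send_request(request, state) do
    id = state.next_id
    send(state.conn, {:push, self(), id, request})
    %{state | pending: Map.put(state.pending, id, request), next_id: id + 1}
  end
end
```

Because demand is only replenished on acknowledgement, the number of pending requests can never exceed the initial 100, no matter how fast the producer fills up.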

An example incident

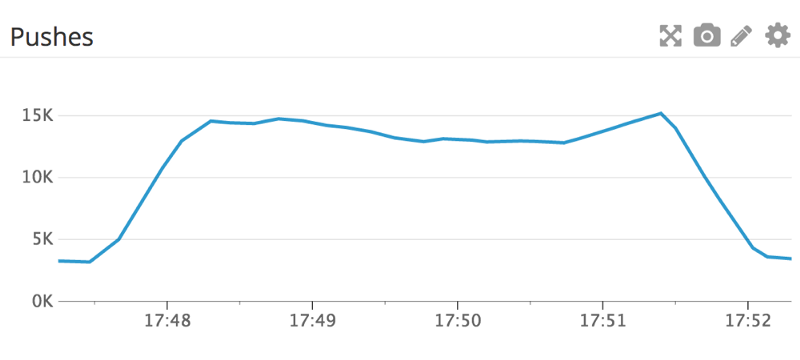

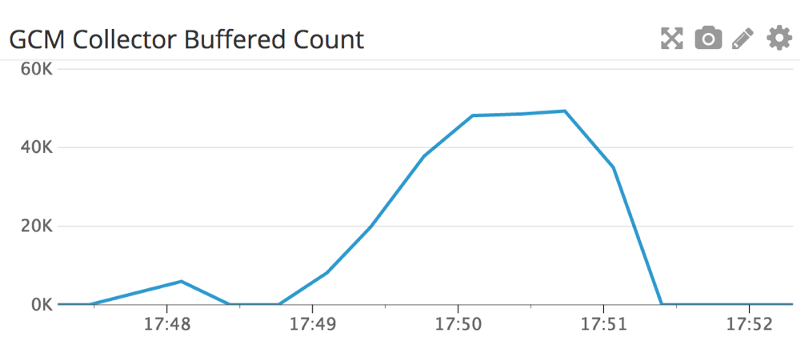

Below is a real incident the new system handled. The top graph is the number of push requests per second flowing through the system. The bottom graph is the number of push requests buffered by the Push Collector.

Note: These graphs are taken from the first incident after we deployed the new system. Our pushes per second have more than doubled since then. Also, we only send pushes when users are not active on the application.

Order of events:

- ~17:47:00 — The system is nominal.

- ~17:47:30 — We start receiving a burst of messages. The Push Collector has a small blip in its buffer count as the Pushers react. Shortly after, the buffer goes down for a little bit.

- ~17:48:50 — The Pushers cannot send messages to Firebase faster than they are coming in, so the buffer in the Push Collector starts filling up.

- ~17:50:00 — The Push Collector buffer starts peaking and sheds some requests.

- ~17:50:50 — The Push Collector buffer stops peaking.

- ~17:51:30 — The requests peak then slow down.

- ~17:52:30 — The system is completely back to normal.

During this entire incident there was no noticeable impact to the system or users. Obviously a few notifications were dropped. Had they not been dropped, the system might never have recovered, or the Push Collector might have fallen over. We find this to be an acceptable compromise for something like notifications.

Elixir’s Success

At Discord we have been very happy using Elixir/Erlang as a core technology of our backend services. We are pleased to see additions such as GenStage that build on the rock solid technologies of Erlang/OTP.

We are looking for brave souls to help solve problems like these as Discord continues to grow. If you love games and these types of problems make you super excited, we’re hiring! Check out our available positions here.
